The greatest risk to public health from microbes in water is associated with consumption of drinking-water that is contaminated with human and animal excreta, although other sources and routes of exposure may also be significant.

Waterborne outbreaks have been associated with inadequate treatment of water supplies and unsatisfactory management of drinking-water distribution. For example, in distribution systems, such outbreaks have been linked to cross-connections, contamination during storage, low water pressure and intermittent supply. Waterborne outbreaks are preventable if an integrated risk management framework based on a multiple-barrier approach from catchment to consumer is applied. Implementing an integrated risk management framework to keep the water safe from contamination in distribution systems includes the protection of water sources, the proper selection and operation of drinking-water treatment processes, and the correct management of risks within the distribution systems (for further information, see the supporting document Water safety in distribution systems; Annex 1).

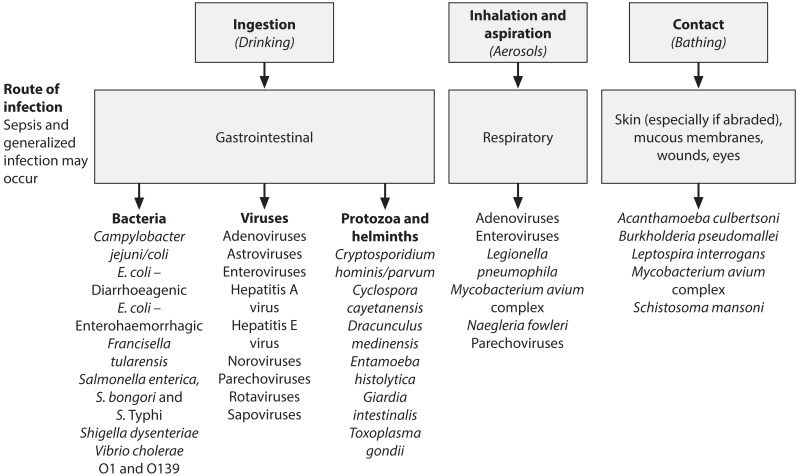

This chapter focuses on organisms for which there is evidence, from outbreak studies or from prospective studies in non-outbreak situations, that disease is caused by ingestion of drinking-water, inhalation of water droplets or dermal contact with drinking-water, and on the prevention and control of that disease. For the purpose of the Guidelines, these routes are considered waterborne.

Chapter 11 (Microbial fact sheets) provides additional detailed information on individual waterborne pathogens, as well as on indicator microorganisms.

7.1. Microbial hazards associated with drinking-water

Infectious diseases caused by pathogenic bacteria, viruses and parasites (e.g. protozoa and helminths) are the most common and widespread health risk associated with drinking-water. The public health burden is determined by the severity and incidence of the illnesses associated with pathogens, their infectivity and the population exposed. In vulnerable subpopulations, disease outcome may be more severe.

A breakdown in water supply safety (source, treatment or distribution) may lead to large-scale contamination and potentially to detectable disease outbreaks. In other cases, low-level, potentially repeated contamination may lead to significant sporadic disease, but public health surveillance is unlikely to identify contaminated drinking-water as the source.

Waterborne pathogens have several properties that distinguish them from other drinking-water contaminants:

Pathogens can cause acute and also chronic health effects.

Some pathogens can grow in the environment.

Pathogens are discrete particles (organisms) rather than dissolved substances.

Pathogens are often aggregated or adherent to suspended solids in water, and pathogen concentrations vary in time, so that the likelihood of acquiring an infective dose cannot be predicted from their average concentration in water.

Whether exposure to a pathogen results in disease depends on the dose, invasiveness and virulence of the pathogen, as well as on the immune status of the individual.

If infection is established, pathogens multiply in their host.

Certain waterborne pathogens are also able to multiply in food, beverages or warm water systems, perpetuating or even increasing the likelihood of infection.

Unlike many chemical agents, pathogens do not exhibit a cumulative effect.
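The point about aggregation can be made concrete with a small calculation. The sketch below is illustrative only: it assumes that randomly dispersed organisms follow a Poisson distribution and models clustering with a negative binomial distribution (a common choice, but an assumption here rather than something these Guidelines specify), with a hypothetical mean of 0.1 organisms per glass. At the same average concentration, the clustered case gives a different chance of ingesting any organism at all (about 6.7% rather than 9.5%), while the glasses that are contaminated carry more organisms each.

```python
import math


def p_any_poisson(mean_dose: float) -> float:
    """P(at least one organism) when organisms are randomly dispersed (Poisson)."""
    return 1.0 - math.exp(-mean_dose)


def p_any_clustered(mean_dose: float, k: float) -> float:
    """P(at least one organism) when organisms are clustered (negative binomial).

    k is the dispersion parameter: smaller k means stronger clustering, and the
    result approaches the Poisson case as k becomes very large.
    """
    return 1.0 - (1.0 + mean_dose / k) ** (-k)


mean_dose = 0.1  # hypothetical average number of organisms per glass
p_random = p_any_poisson(mean_dose)
p_clumped = p_any_clustered(mean_dose, k=0.1)

print(f"random:    P(any) = {p_random:.3f}, mean per contaminated glass = {mean_dose / p_random:.2f}")
print(f"clustered: P(any) = {p_clumped:.3f}, mean per contaminated glass = {mean_dose / p_clumped:.2f}")
```

Both cases have the same average dose, yet they expose different fractions of consumers to different doses, which is why the average alone is a poor predictor.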

Quantitative microbial risk assessment (QMRA), a mathematical framework for evaluating infectious risks from human pathogens, can assist in understanding and managing waterborne microbial hazards, especially those associated with sporadic disease.

7.1.1. Waterborne infections

The pathogens that may be transmitted through contaminated drinking-water are diverse in characteristics, behaviour and resistance. The accompanying table provides general information on pathogens that are of relevance for drinking-water supply management. Waterborne transmission of the pathogens listed has been confirmed by epidemiological studies and case histories. Part of the demonstration of pathogenicity involves reproducing the disease in suitable hosts. Experimental studies in which healthy adult volunteers are exposed to known numbers of pathogens provide information, but these data are applicable to only part of the exposed population; extrapolation to more vulnerable subpopulations is an issue that remains to be studied in more detail. A separate table provides information on organisms that have been suggested as possible causes of waterborne disease but for which evidence is inconclusive or lacking. The spectrum of pathogens may change as a result of host, pathogen and environmental changes, such as fluctuations in human and animal populations, reuse of wastewater, changes in lifestyles and medical interventions, population movement and travel, selective pressures for new pathogens and mutants or recombinations of existing pathogens. The immunity of individuals also varies considerably, whether acquired by contact with a pathogen or influenced by such factors as age, sex, state of health and living conditions.

For pathogens transmitted by the faecal–oral route, drinking-water is only one vehicle of transmission. Contamination of food, hands, utensils and clothing can also play a role, particularly when domestic sanitation and hygiene are poor. Improvements in the quality and availability of water, excreta disposal and general hygiene are all important in reducing faecal–oral disease transmission.

Microbial drinking-water safety is not related only to faecal contamination. Some organisms grow in piped water distribution systems (e.g. Legionella), whereas others occur in source waters (e.g. guinea worm [Dracunculus medinensis]) and may cause outbreaks and individual cases. Some other microbes (e.g. toxic cyanobacteria) require specific management approaches, which are covered elsewhere in these Guidelines (see section 11.5).

Although consumption of contaminated drinking-water represents the greatest risk, other routes of transmission can also lead to disease, with some pathogens transmitted by multiple routes (e.g. adenovirus). Certain serious illnesses result from inhalation of water droplets (aerosols) in which the causative organisms have multiplied because of warm waters and the presence of nutrients. These include legionellosis, caused by Legionella spp., and illnesses caused by the amoebae Naegleria fowleri (primary amoebic meningoencephalitis) and Acanthamoeba spp. (amoebic meningitis, pulmonary infections).

Schistosomiasis (bilharziasis) is a major parasitic disease of tropical and sub-tropical regions that is transmitted when the larval stage (cercariae), which is released by infected aquatic snails, penetrates the skin. It is primarily spread by contact with water. Ready availability of safe drinking-water contributes to disease prevention by reducing the need for contact with contaminated water resources—for example, when collecting water to carry to the home or when using water for bathing or laundry.

It is conceivable that unsafe drinking-water contaminated with soil or faeces could act as a carrier of other infectious parasites, such as Balantidium coli (balantidiasis) and certain helminths (species of Fasciola, Fasciolopsis, Echinococcus, Spirometra, Ascaris, Trichuris, Toxocara, Necator, Ancylostoma, Strongyloides and Taenia solium). However, in most of these, the normal mode of transmission is ingestion of the eggs in food contaminated with faeces or faecally contaminated soil (in the case of Taenia solium, ingestion of the larval cysticercus stage in uncooked pork) rather than ingestion of contaminated drinking-water.

Other pathogens naturally present in the environment may be able to cause disease in vulnerable subpopulations: the elderly or the very young, patients with burns or extensive wounds, those undergoing immunosuppressive therapy or those with acquired immunodeficiency syndrome (AIDS). If water used by such persons for drinking or bathing contains sufficient numbers of these organisms, they can produce various infections of the skin and the mucous membranes of the eye, ear, nose and throat. Examples of such agents are Pseudomonas aeruginosa and species of Flavobacterium, Acinetobacter, Klebsiella, Serratia, Aeromonas and certain “slow-growing” (non-tuberculous) mycobacteria (see the supporting document Pathogenic mycobacteria in water; Annex 1). A number of these organisms are listed in the accompanying table and described in more detail in chapter 11.

Most of the human pathogens listed in the accompanying table (which are also described in more detail in chapter 11) are distributed worldwide; some, however, such as those causing outbreaks of cholera or guinea worm disease, are regional. Eradication of Dracunculus medinensis is a recognized target of the World Health Assembly (1991).

It is likely that there are pathogens not shown in the accompanying table that are also transmitted by water. This is because the number of known pathogens for which water is a transmission route continues to increase as new or previously unrecognized pathogens are discovered (WHO, 2003).

7.1.2. Emerging issues

A number of developments are subsumed under the concept of “emerging issues” in drinking-water. Global changes, such as human development, population growth and movement and climate change (see section 6.1), exert pressures on the quality and quantity of water resources that may influence waterborne disease risks. Between 1972 and 1999, 35 new agents of disease were discovered, and many more have re-emerged after long periods of inactivity or are expanding into areas where they have not previously been reported (WHO, 2003). In 2003, a coronavirus was identified as the causative agent of severe acute respiratory syndrome, causing a multinational outbreak. Even more recently, influenza viruses originating from animal reservoirs have been transmitted to humans on several occasions, causing flu pandemics and seasonal epidemic influenza episodes (see the supporting document Review of latest available evidence on potential transmission of avian influenza (H5N1) through water and sewage and ways to reduce the risks to human health; Annex 1). Zoonotic pathogens make up 75% of the emerging pathogens and are of increasing concern for human health, along with pathogens with strictly human-to-human transmission. Zoonotic pathogens pose the greatest challenges to ensuring the safety of drinking-water and ambient water, now and in the future (see the supporting document Waterborne zoonoses; Annex 1). For each emerging pathogen, whether zoonotic or not, it should be considered whether it can be transmitted through water and, if so, which prevention and control measures can be suggested to minimize this risk.

7.1.3. Persistence and growth in water

Some waterborne pathogens, such as Legionella, can grow in water, whereas host-dependent waterborne pathogens, such as noroviruses and Cryptosporidium, cannot grow in water but are able to persist in it.

Host-dependent waterborne pathogens, after leaving the body of their host, gradually lose viability and the ability to infect. The rate of decay is usually exponential, and a pathogen will become undetectable after a certain period. Pathogens with low persistence must rapidly find new hosts and are more likely to be spread by person-to-person contact or poor personal hygiene than by drinking-water. Persistence is affected by several factors, of which temperature is the most important. Decay is usually faster at higher temperatures and may be mediated by the lethal effects of ultraviolet (UV) radiation in sunlight acting near the water surface.
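Assuming simple first-order (exponential) decay, the fraction of organisms still viable after time t is exp(−kt), and the time for a 90% (1-log10) reduction, often written T90, is ln(10)/k. The decay rate constants below are hypothetical and serve only to show how a faster decay rate, as at higher temperature, shortens persistence.

```python
import math


def surviving_fraction(k_per_day: float, days: float) -> float:
    """First-order decay: fraction of organisms still viable after `days`."""
    return math.exp(-k_per_day * days)


def t90_days(k_per_day: float) -> float:
    """Time for a 90% (1-log10) reduction under first-order decay."""
    return math.log(10) / k_per_day


# Hypothetical decay rate constants (per day), for illustration only.
scenarios = {"cooler water": 0.05, "warmer water": 0.20}
for label, k in scenarios.items():
    print(f"{label}: T90 = {t90_days(k):.1f} days, "
          f"fraction viable after 30 days = {surviving_fraction(k, 30):.3f}")
```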

Relatively high amounts of biodegradable organic carbon, together with warm waters and low residual concentrations of chlorine, can permit growth of Legionella, Vibrio cholerae, Naegleria fowleri, Acanthamoeba and nuisance organisms in some surface waters and during water distribution (see also the supporting documents Heterotrophic plate counts and drinking-water safety and Legionella and the prevention of legionellosis; Annex 1).

Microbial water quality may vary rapidly and widely. Short-term peaks in pathogen concentration may increase disease risks considerably and may also trigger outbreaks of waterborne disease. Microorganisms can accumulate in sediments and are mobilized when water flow increases. Results of water quality testing for microbes are not normally available in time to inform management action and prevent the supply of unsafe water.
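The influence of short-term peaks can be shown with a hypothetical year of daily concentrations. Because infection risk at low doses is roughly proportional to dose (see the dose–response assessment later in this chapter), expected annual risk follows the arithmetic mean concentration, which a few peak days can dominate. All numbers below are invented for illustration.

```python
import statistics

# Hypothetical daily concentrations in treated water (organisms per litre):
# 360 ordinary days plus 5 short-term peak days, e.g. after heavy rainfall.
daily_conc = [0.001] * 360 + [1.0] * 5
litres_per_day = 1.0  # assumed unboiled consumption

daily_dose = [c * litres_per_day for c in daily_conc]
annual_dose = sum(daily_dose)
peak_share = sum(d for d in daily_dose if d >= 1.0) / annual_dose

print(f"median concentration: {statistics.median(daily_conc):.3f} per litre")
print(f"mean concentration:   {statistics.mean(daily_conc):.4f} per litre")
print(f"share of annual dose from the 5 peak days: {peak_share:.0%}")
```

In this made-up series, judging the supply by its typical (median) day would understate the annual dose roughly fifteen-fold, and the five peak days contribute over 90% of it.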

7.1.4. Public health aspects

Outbreaks of waterborne disease may affect large numbers of persons, and the first priority in developing and applying controls on drinking-water quality should be the control of such outbreaks. Available evidence also suggests that drinking-water can contribute to background rates of disease in non-outbreak situations, and control of drinking-water quality should therefore also address waterborne disease in the general community.

Experience has shown that systems for the detection of waterborne disease outbreaks are typically inefficient in countries at all levels of socioeconomic development, and failure to detect outbreaks is not a guarantee that they do not occur; nor does it suggest that drinking-water should necessarily be considered safe.

Some of the pathogens that are known to be transmitted through contaminated drinking-water lead to severe and sometimes life-threatening disease. Examples include typhoid, cholera, infectious hepatitis (caused by hepatitis A virus or hepatitis E virus) and disease caused by Shigella spp. and E. coli O157. Others are typically associated with less severe outcomes, such as self-limiting diarrhoeal disease (e.g. noroviruses, Cryptosporidium).

The effects of exposure to pathogens are not the same for all individuals or, as a consequence, for all populations. Repeated exposure to a pathogen may be associated with a lower probability or severity of illness because of the effects of acquired immunity. For some pathogens (e.g. hepatitis A virus), immunity is lifelong, whereas for others (e.g. Campylobacter), the protective effects may be restricted to a few months to years. In contrast, vulnerable subpopulations (e.g. the young, the elderly, pregnant women, the immunocompromised) may have a greater probability of illness or the illness may be more severe, including mortality. Not all pathogens have greater effects in all vulnerable subpopulations.

Not all infected individuals will develop symptomatic disease. The proportion of the infected population that is asymptomatic (including carriers) differs between pathogens and also depends on population characteristics, such as prevalence of immunity. Those with asymptomatic infections as well as patients during and after illness may all contribute to secondary spread of pathogens.

7.2. Health-based target setting

7.2.1. Health-based targets applied to microbial hazards

General approaches to health-based target setting are described in section 2.1 and chapter 3.

Sources of information on health risks may be from both epidemiology and QMRA, and typically both are employed as complementary sources. Development of health-based targets for many pathogens may be constrained by limitations in the data. Additional data, derived from both epidemiology and QMRA, are becoming progressively more available. Locally generated data will always be of great value in setting national targets.

Health-based targets may be set using a direct health outcome approach, where the waterborne disease burden is believed to be sufficiently high to allow measurement of the impact of interventions—that is, epidemiological measurement of reductions in disease that can be attributed to improvements in drinking-water quality.

Interpreting and applying information from analytical epidemiological studies to derive health-based targets for application at a national or local level require consideration of a number of factors, including the following questions:

Are specific estimates of disease reduction or indicative ranges of expected reductions to be provided?

Was the study sample sufficiently representative of the target population to give confidence that the results will hold across a wider group?

To what extent will minor differences in demographic or socioeconomic conditions affect expected outcomes?

More commonly, QMRA is used as the basis for setting microbial health-based targets, particularly where the fraction of disease that can be attributed to drinking-water is low or difficult to measure directly through public health surveillance or analytical epidemiological studies.

For the control of microbial hazards, the most frequently applied form of health-based target is the performance target (see section 3.3.3), which is anchored to a predetermined tolerable burden of disease and established by applying QMRA, taking into account raw water quality. Water quality targets (see section 3.3.2) are typically not developed for pathogens; monitoring finished water for pathogens is not considered a feasible or cost-effective option because pathogen concentrations equivalent to tolerable levels of risk are typically less than 1 organism per 10⁴–10⁵ litres.
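A performance target of this kind is often expressed as the log10 reduction needed to bring the source water concentration down to a tolerable concentration. The minimal sketch below takes both concentrations as given; in practice the tolerable concentration would be derived by QMRA from the reference level of risk, and the raw water value of 10 organisms per litre is hypothetical.

```python
import math


def required_log_reduction(raw_per_litre: float, tolerable_per_litre: float) -> float:
    """Log10 reduction needed to bring raw water down to a tolerable concentration."""
    return math.log10(raw_per_litre / tolerable_per_litre)


raw = 10.0  # hypothetical source water concentration (organisms per litre)
for tolerable in (1e-4, 1e-5):  # 1 organism per 10^4 and per 10^5 litres
    print(f"tolerable {tolerable:g}/L -> {required_log_reduction(raw, tolerable):.1f} log10 reduction")
```

The same arithmetic shows why finished-water monitoring is impractical: verifying a concentration of 1 organism per 10⁴ litres directly would require sampling volumes of that order.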

7.2.2. Reference pathogens

Setting performance targets for all potentially waterborne pathogens, including bacteria, viruses, protozoa and helminths, is not practical, and there are insufficient data to do so. A more practical approach is to identify reference pathogens that represent groups of pathogens, taking into account variations in the characteristics, behaviours and susceptibilities of each group to different treatment processes. Typically, different reference pathogens will be identified to represent bacteria, viruses, protozoa and helminths.

Selection criteria for reference pathogens include all of the following elements:

waterborne transmission established as a route of infection;

sufficient data available to enable a QMRA to be performed, including data on dose–response relationships in humans and disease burden;

occurrence in source waters;

persistence in the environment;

sensitivity to removal or inactivation by treatment processes;

infectivity, incidence and severity of disease.

Some of the criteria, such as environmental persistence and sensitivity to treatment processes, relate to the specific characteristics of the reference pathogens. Other criteria can be subject to local circumstances and conditions. These can include waterborne disease burden, which can be influenced by the prevalence of the organism from other sources, levels of immunity and nutrition (e.g. rotavirus infections have different outcomes in high- and low-income regions); and occurrence of the organism in source waters (e.g. presence of toxigenic Vibrio cholerae and Entamoeba histolytica is more common in defined geographical regions, whereas Naegleria fowleri is associated with warmer waters).

Selection of reference pathogens

The selection of reference pathogens may vary between different countries and regions and should take account of local conditions, including incidence and severity of waterborne disease and source water characteristics (see section 7.3.1). Evidence of disease prevalence and significance should be used in selecting reference pathogens. However, the range of potential reference pathogens is limited by data availability, particularly in regard to human dose–response models for QMRA.

Decision-making regarding selection of reference pathogens should be informed by all available data sources, including infectious disease surveillance and targeted studies, outbreak investigations and registries of laboratory-confirmed clinical cases. Such data can help identify the pathogens that are likely to be the biggest contributors to the burden of waterborne disease. These pathogens may be suitable choices as reference pathogens and should be considered when establishing health-based targets.

Viruses

Viruses are the smallest pathogens and hence are more difficult to remove by physical processes such as filtration. Specific viruses may be less sensitive to disinfection than bacteria and parasites (e.g. adenovirus is less sensitive to UV light). Viruses can persist for long periods in water. Infective doses are typically low. Viruses typically have a limited host range, and many are species specific. Most human enteric viruses are not carried by animals, although there are some exceptions, including specific strains of hepatitis E virus.

Rotaviruses, enteroviruses and noroviruses have been identified as potential reference pathogens. Rotaviruses are the most important cause of gastrointestinal infection in children and can have severe consequences, including hospitalization and death, with the latter being far more frequent in low-income regions. There is a dose–response model for rotaviruses, but there is no routine culture-based method for quantifying infectious units. Typically, rotaviruses are excreted in very large numbers by infected patients, and waters contaminated by human waste could contain high concentrations. Occasional outbreaks of waterborne disease have been recorded. In low-income countries, sources other than water are likely to dominate.

Enteroviruses, including polioviruses and the more recently recognized parechoviruses, can cause mild febrile illness, but are also important causative agents of severe diseases, such as paralysis, meningitis and encephalitis, in children. There is a dose–response model for enteroviruses, and there is a routine culture-based analysis for measuring infective particles. Enteroviruses are excreted in very large numbers by infected patients, and waters contaminated by human waste could contain high concentrations.

Noroviruses are a major cause of acute gastroenteritis in all age groups. Symptoms of illness are generally mild and rarely last longer than 3 days; hence, the burden of disease per case is lower than for rotaviruses. However, infection does not yield lasting protective immunity. Numerous outbreaks have been attributed to drinking-water. A dose–response model has been developed to estimate infectivity for several norovirus strains, but no culture-based method is available.

Bacteria

Bacteria are generally the group of pathogens that is most sensitive to inactivation by disinfection. Some free-living pathogens, such as Legionella and non-tuberculous mycobacteria, can grow in water environments, but enteric bacteria typically do not grow in water and survive for shorter periods than viruses or protozoa. Many bacterial species that are infective to humans are carried by animals.

There are a number of potentially waterborne bacterial pathogens with known dose–response models, including Vibrio, Campylobacter, E. coli O157, Salmonella and Shigella.

Toxigenic Vibrio cholerae can cause watery diarrhoea. When the illness is left untreated, as may be the case when people are displaced by conflict and natural disaster, case fatality rates are very high. The infective dose is relatively high. Large waterborne outbreaks have been described and continue to occur.

Campylobacter is an important cause of diarrhoea worldwide. Illness can produce a wide range of symptoms, but mortality is low. Compared with other bacterial pathogens, the infective dose is relatively low and can be below 1000 organisms. It is relatively common in the environment, and waterborne outbreaks have been recorded.

Waterborne infection by E. coli O157 and other enterohaemorrhagic strains of E. coli is far less common than infection by Campylobacter, but the symptoms of infection are more severe, including haemolytic uraemic syndrome and death. The infective dose can be very low (fewer than 100 organisms).

Shigella causes over 2 million infections each year, resulting in about 60 000 deaths, mainly in developing countries. The infective dose is low and can be as few as 10–100 organisms. Waterborne outbreaks have been recorded.

Although non-typhoidal Salmonella rarely causes waterborne outbreaks, S. Typhi causes large and devastating outbreaks of waterborne typhoid.

Protozoa

Protozoa are the group of pathogens that is least sensitive to inactivation by chemical disinfection. UV light irradiation is effective against Cryptosporidium, but Cryptosporidium is highly resistant to oxidizing disinfectants such as chlorine. Protozoa are of a moderate size (> 2 μm) and can be removed by physical processes. They can survive for long periods in water. They are moderately species specific. Livestock and humans can be sources of protozoa such as Cryptosporidium and Balantidium, whereas humans are the sole reservoirs of pathogenic Cyclospora and Entamoeba. Infective doses are typically low.

There are dose–response models available for Giardia and Cryptosporidium. Giardia infections are generally more common than Cryptosporidium infections, and symptoms can be longer lasting. However, Cryptosporidium is smaller than Giardia and hence more difficult to remove by physical processes; it is also more resistant to oxidizing disinfectants, and there is some evidence that it survives longer in water environments.

7.2.3. Quantitative microbial risk assessment

QMRA systematically combines available information on exposure (i.e. the number of pathogens ingested) and dose–response models to produce estimates of the probability of infection associated with exposure to pathogens in drinking-water. Epidemiological data on frequency of asymptomatic infections, duration and severity of illness can then be used to estimate disease burdens.

QMRA can be used to determine performance targets and as the basis for assessing the effects of improved water quality on health in the population and subpopulations. Mathematical modelling can be used to estimate the effects of low doses of pathogens in drinking-water on health.

Risk assessment, including QMRA, commences with problem formulation to identify all possible hazards and their pathways from sources to recipients. Human exposure to the pathogens (environmental concentrations and volumes ingested) and dose–response relationships for selected (or reference) organisms are then combined to characterize the risks. With the use of additional information (social, cultural, political, economic, environmental, etc.), management options can be prioritized. To encourage stakeholder support and participation, a transparent procedure and active risk communication at each stage of the process are important. An example of a risk assessment approach is described below. For more detailed information on QMRA in the context of drinking-water safety, see the supporting document Quantitative microbial risk assessment: application for water safety management (Annex 1).

Problem formulation and hazard identification

All potential hazards, sources and events that can lead to the presence of microbial pathogens (i.e. what can happen and how) should be identified and documented for each component of the drinking-water system, regardless of whether or not the component is under the direct control of the drinking-water supplier. This includes point sources of pollution (e.g. human and industrial waste discharges) as well as diffuse sources (e.g. those arising from agricultural and animal husbandry activities). Continuous, intermittent or seasonal pollution patterns should also be considered, as well as extreme and infrequent events, such as droughts and floods.

The broader sense of hazards includes hazardous scenarios, which are events that may lead to exposure of consumers to specific pathogenic microorganisms. In this sense, the hazardous event (e.g. peak contamination of source water with domestic wastewater) may itself be referred to as the hazard.

As a QMRA cannot be performed for each of the hazards identified, representative (or reference) organisms are selected that, if controlled, would ensure control of all pathogens of concern. Typically, this implies inclusion of at least one reference bacterium, virus and protozoan and, where relevant, helminth. In this section, Campylobacter, rotavirus and Cryptosporidium have been used as example reference pathogens to illustrate the application of risk assessment and the calculation of performance targets.

Exposure assessment

Exposure assessment in the context of drinking-water consumption involves estimation of the number of pathogens to which an individual is exposed, principally through ingestion. Exposure assessment inevitably contains uncertainty and must account for variability of such factors as concentrations of pathogens over time and volumes ingested.

Exposure can be considered as a single dose of pathogens that a consumer ingests at a certain point in time or the total amount over several exposures (e.g. over a year). Exposure is determined by the concentration of pathogens in drinking-water and the volume of water consumed.

It is rarely possible or appropriate to directly measure pathogens in drinking-water on a regular basis. More often, concentrations in raw waters are assumed or measured, and estimated reductions (for example, through treatment) are applied to estimate the concentration in the water consumed. Pathogen measurement, when performed, is generally best carried out at the location where the pathogens are at highest concentration (generally raw waters). Estimation of their removal by sequential control measures is generally achieved by the use of indicator organisms, such as E. coli for enteric bacterial pathogens (see the supporting document Water treatment and pathogen control; Annex 1).

The other component of exposure assessment, which is common to all pathogens, is the volume of unboiled water consumed by the population, including person-to-person variation in consumption behaviour and especially consumption behaviour of vulnerable subpopulations. For microbial hazards, it is important that the unboiled volume of drinking-water, both consumed directly and used in food preparation, is used in the risk assessment, as heating will rapidly inactivate pathogens. This amount is lower than that used for deriving water quality targets, such as chemical guideline values.

The daily exposure (dose) of a consumer to pathogens in drinking-water can be assessed by multiplying the concentration of pathogens in drinking-water by the volume of drinking-water consumed. For the purposes of the example model calculations, drinking-water consumption was assumed to be 1 litre of unboiled water per day, but location-specific data on drinking-water consumption are preferred.
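The dose calculation just described can be sketched as follows, assuming (as in the exposure assessment above) that the concentration in consumed water is estimated from a raw water concentration and a log10 reduction credited to treatment. The function name and input values are hypothetical.

```python
def daily_dose(raw_per_litre: float, log_reduction: float, litres_unboiled: float = 1.0) -> float:
    """Organisms ingested per day from drinking-water.

    Concentration in consumed water is estimated from the raw water concentration
    and the log10 reduction credited to treatment; the dose is that concentration
    multiplied by the volume of unboiled water consumed.
    """
    consumed_per_litre = raw_per_litre * 10 ** (-log_reduction)
    return consumed_per_litre * litres_unboiled


# Hypothetical example: 10 organisms/L in raw water, 4-log10 treatment, 1 L/day unboiled.
dose = daily_dose(10.0, 4.0)
# A mean dose of 0.001 organisms/day means, on average, one organism per 1000 days.
print(f"daily dose: {dose:.1e} organisms")
print(f"annual total: {dose * 365:.3f} organisms")
```

The annual total corresponds to the second way of expressing exposure mentioned above (the total amount over several exposures).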

Dose–response assessment

The probability of an adverse health effect following exposure to one or more pathogenic organisms is derived from a dose–response model. Available dose–response data have been obtained mainly from studies using healthy adult volunteers. However, adequate data are lacking for vulnerable subpopulations, such as children, the elderly and the immunocompromised, who may suffer more severe disease outcomes.

The conceptual basis for the dose–response model is the observation that infection following exposure to a given dose is a conditional event: for infection to occur, one or more viable pathogens must have been ingested, and one or more of these ingested pathogens must then have survived in the host’s body. An important concept is the single-hit principle (i.e. that even a single pathogen may be able to cause infection and disease). This concept supersedes the concept of (minimum) infectious dose that is frequently used in older literature (see the supporting document Hazard characterization for pathogens in food and water; Annex 1).

In general, well-dispersed pathogens in water are considered to be Poisson distributed. When the individual probability of any organism surviving and starting infection is the same, the dose–response relationship simplifies to an exponential function. If, however, there is heterogeneity in this individual probability, this leads to the beta-Poisson dose–response relationship, where the “beta” stands for the distribution of the individual probabilities among pathogens (and hosts). At low exposures, such as would typically occur in drinking-water, the dose–response model is approximately linear and can be represented simply as the probability of infection resulting from exposure to a single organism (see the supporting document Hazard characterization for pathogens in food and water; Annex 1).
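The two dose–response forms named above, and their low-dose linear approximations, can be sketched as below. The beta-Poisson function uses the commonly applied approximate form 1 − (1 + d/β)^(−α), which is reasonable when β is large and α is small relative to β; the parameter values are hypothetical and not fitted to any pathogen. At the smallest dose, both models are almost exactly equal to their linear approximations (r·d and (α/β)·d), which is why a single probability of infection per organism suffices at drinking-water exposures.

```python
import math


def p_inf_exponential(dose: float, r: float) -> float:
    """Exponential model: each ingested organism independently infects with probability r."""
    return 1.0 - math.exp(-r * dose)


def p_inf_beta_poisson(dose: float, alpha: float, beta: float) -> float:
    """Approximate beta-Poisson model: infection probability varies among organisms and hosts."""
    return 1.0 - (1.0 + dose / beta) ** (-alpha)


# Hypothetical parameters, chosen only to show the shape of each curve.
r, alpha, beta = 0.01, 0.2, 40.0

for dose in (1e-3, 1.0, 100.0):
    exp_p = p_inf_exponential(dose, r)
    bp_p = p_inf_beta_poisson(dose, alpha, beta)
    print(f"dose {dose:>7g}: exponential {exp_p:.3e} (linear {r * dose:.3e}); "
          f"beta-Poisson {bp_p:.3e} (linear {alpha / beta * dose:.3e})")
```

At higher doses both curves fall below their linear approximations, as a probability must saturate, but such doses are far above those expected in treated drinking-water.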

Risk characterization

Risk characterization brings together the data collected on exposure, dose–response and the incidence and severity of disease.

The probability of infection can be estimated as the product of the exposure to drinking-water and the probability that exposure to one organism would result in infection. The probability of infection per day is multiplied by 365 to calculate the probability of infection per year. In doing so, it is assumed that different exposure events are independent, in that no protective immunity is built up. This simplification is justified for low risks only, such as those discussed here.

Not all infected individuals will develop clinical illness; asymptomatic infection is common for most pathogens. The percentage of infected persons who will develop clinical illness depends on the pathogen, but also on other factors, such as the immune status of the host. Risk of illness per year is obtained by multiplying the probability of infection by the probability of illness given infection.
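The annual calculations in the two paragraphs above can be sketched as below. The exact "at least one infection" formula is included only to show why multiplying by 365 is acceptable at low risk. The daily probability and the probability of illness given infection are hypothetical values.

```python
import math


def annual_infection_prob(daily_p_inf: float, days: int = 365) -> float:
    """Probability of infection per year as described in the text: the daily
    probability multiplied by the number of exposure days. This treats
    exposures as independent and ignores acquired immunity, which is only
    reasonable when the daily risk is very small."""
    return daily_p_inf * days


def annual_infection_prob_exact(daily_p_inf: float, days: int = 365) -> float:
    """Probability of at least one infection in `days` independent exposures,
    1 - (1 - p) ** days, shown only to check the simplification above."""
    return -math.expm1(days * math.log1p(-daily_p_inf))


def annual_illness_prob(annual_p_inf: float, p_ill_given_inf: float) -> float:
    """Risk of illness per year = P(infection per year) x P(illness | infection)."""
    return annual_p_inf * p_ill_given_inf


daily = 2e-6  # hypothetical daily probability of infection
annual = annual_infection_prob(daily)
print(annual, annual_infection_prob_exact(daily))  # nearly identical at this low risk
print(annual_illness_prob(annual, p_ill_given_inf=0.5))  # hypothetical P(ill | inf)
```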

The low numbers in the example risk assessment table can be interpreted to represent the probability that a single individual will develop illness in a given year. For example, a risk of illness for Campylobacter of 2.2 × 10−4 per year indicates that, on average, 1 out of 4600 consumers would contract campylobacteriosis from consumption of drinking-water.

To translate the risk of developing a specific illness to disease burden per case, the metric disability-adjusted life year, or DALY, is used (see Box 3.1 in chapter 3). This metric reflects not only the effects of acute end-points (e.g. diarrhoeal illness) but also mortality and the effects of more serious end-points (e.g. Guillain-Barré syndrome associated with Campylobacter). The disease burden per case varies widely. For example, the disease burden per 1000 cases of rotavirus diarrhoea is 480 DALYs in low-income regions, where child mortality frequently occurs. However, it is 14 DALYs per 1000 cases in high-income regions, where hospital facilities are accessible to the great majority of the population (see the supporting document Quantifying public health risk in the WHO Guidelines for drinking-water quality; Annex 1). This considerable difference in disease burden results in far stricter treatment requirements in low-income regions for the same raw water quality in order to obtain the same risk (expressed as DALYs per person per year). Ideally, the health outcome target of 10−6 DALY per person per year used in the example calculations should be adapted to specific national situations. The example calculations make no allowance for effects on immunocompromised persons (e.g. cryptosporidiosis in patients with human immunodeficiency virus or AIDS), which are significant in some countries. Section 3.2 gives more information on the DALY metric and how it is applied to derive a reference level of risk.

Only a proportion of the population may be susceptible to some pathogens, because immunity developed after an initial episode of infection or illness may provide lifelong protection. Examples include hepatitis A virus and rotaviruses. It is estimated that in developing countries, all children above the age of 5 years are immune to rotaviruses because of repeated exposure in the first years of life. This translates to an average of 17% of the population being susceptible to rotavirus illness. In developed countries, rotavirus infection is also common in the first years of life, and the illness is diagnosed mainly in young children, but the percentage of young children as part of the total population is lower. This translates to an average of 6% of the population in developed countries being susceptible.

The uncertainty of the risk outcome is the result of the uncertainty and variability of the data collected in the various steps of the risk assessment. Risk assessment models should ideally account for this variability and uncertainty, although here we present only point estimates (see below).

It is important to choose the most appropriate point estimate for each of the variables. Theoretical considerations show that risks are directly proportional to the arithmetic mean of the ingested dose. Hence, arithmetic means of variables such as concentration in raw water, removal by treatment and consumption of drinking-water are recommended. This recommendation is different from the usual practice among microbiologists and engineers of converting concentrations and treatment effects to log values and making calculations or specifications on the log scale. Such calculations result in estimates of the geometric mean rather than the arithmetic mean, and these may significantly underestimate risk. Analysing site-specific data may therefore require going back to the raw data (i.e. counts and tested volumes) rather than relying on reported log-transformed values, as these introduce ambiguity.
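The difference between the two kinds of mean can be illustrated with a short sketch. The ten sample concentrations are hypothetical routine results, not emergency events. The sketch also shows that averaging log10 values and back-transforming the result gives the geometric mean, not the arithmetic mean that the risk calculation needs.

```python
import math
import statistics

# Hypothetical oocyst counts per litre from ten routine raw-water samples
samples = [0.05, 0.1, 0.1, 0.2, 0.3, 0.5, 0.8, 1.5, 3.0, 6.0]

arith = statistics.fmean(samples)         # what the risk calculation needs
geo = statistics.geometric_mean(samples)  # what log-scale averaging yields

# Averaging log10 values and back-transforming reproduces the geometric mean
log_scale_mean = 10 ** statistics.fmean([math.log10(c) for c in samples])

print(f"arithmetic mean: {arith:.3f} per litre")
print(f"geometric mean:  {geo:.3f} per litre")
print(f"underestimate factor: {arith / geo:.1f}")
```

Because risk is proportional to the arithmetic mean dose, the ratio printed last indicates roughly how far risk would be underestimated if the log-scale summary were used for these data.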

Emergencies such as major storms and floods can lead to substantial deteriorations in source water quality, including large short-term increases in pathogen concentrations. These should not be included in calculations of arithmetic means. Inclusion will lead to higher levels of treatment being applied on a continuous basis, with substantial cost implications. It is more efficient to develop specific plans to deal with the events and emergencies (see section 4.4). Such plans can include enhanced treatment or (if possible) selection of alternative sources of water during an emergency.

7.2.4. Risk-based performance target setting

The process outlined above enables estimation of risk on a population level, taking account of raw water quality and impact of control. This can be compared with the reference level of risk (see section 3.2) or a locally developed tolerable risk. The calculations enable quantification of the degree of source protection or treatment that is needed to achieve a specified level of tolerable risk and analysis of the estimated impact of changes in control measures.

Performance targets are most frequently applied to treatment performance—that is, to determine the microbial reduction necessary to ensure water safety. A performance target may be applied to a specific system (i.e. formulated in response to local raw water characteristics) or generalized (e.g. formulated in response to raw water quality assumptions based on a certain type of source) (see also the supporting document Water treatment and pathogen control; Annex 1).

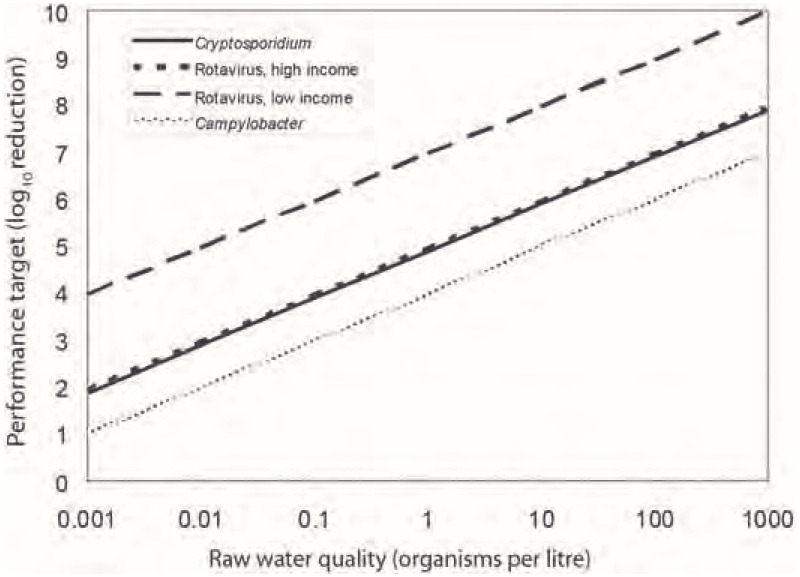

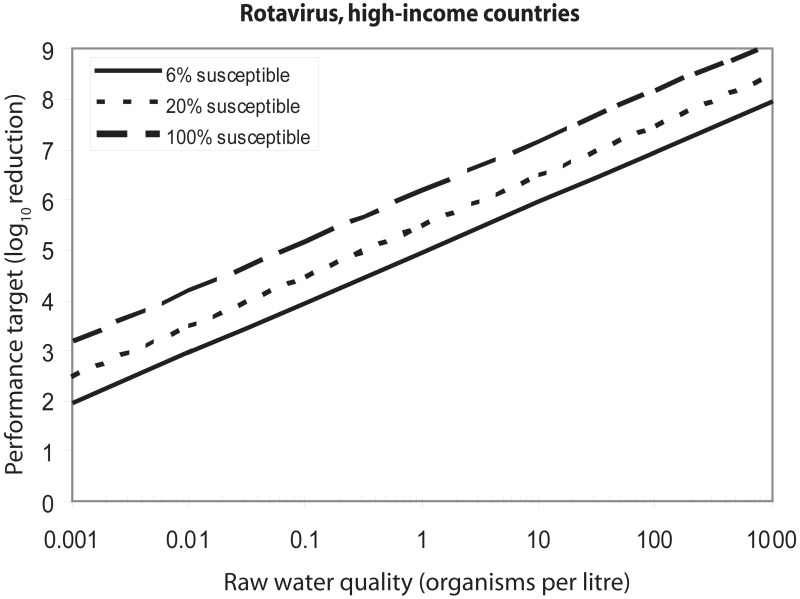

The accompanying figure illustrates the targets for treatment performance for a range of pathogens occurring in raw water. For example, 10 microorganisms per litre of raw water will lead to a performance target of 5.89 logs (or 99.999 87% reduction) for Cryptosporidium or of 5.96 logs (99.999 89% reduction) for rotaviruses in high-income regions to achieve 10−6 DALY per person per year (see also below). The difference in performance targets for rotaviruses in high- and low-income countries (5.96 and 7.96 logs, respectively) is related to the difference in disease severity caused by this organism. In low-income countries, the child case fatality rate is relatively high, and, as a consequence, the disease burden is higher. Also, a larger proportion of the population in low-income countries is under the age of 5 and at risk for rotavirus infection.
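As a rough check on the two-log difference, assume the low-dose linear regime, in which the required treatment scales with log10 of (DALYs per case × susceptible fraction), and hold all other inputs equal. Applying this to the rotavirus figures quoted in this section (480 versus 14 DALYs per 1000 cases; 17% versus 6% susceptible) gives close to 2 log10 units. Mapping the "developing/developed countries" susceptible fractions onto low- and high-income regions is an assumption of this sketch.

```python
import math

# Rotavirus values quoted in this section
DALY_PER_CASE = {"high_income": 14 / 1000, "low_income": 480 / 1000}
SUSCEPTIBLE = {"high_income": 0.06, "low_income": 0.17}  # assumed mapping


def extra_log_reduction(region_a: str, region_b: str) -> float:
    """Additional log10 treatment needed in region_a relative to region_b,
    assuming the low-dose linear regime and identical raw water quality,
    consumption and dose-response in both regions."""
    ratio = (DALY_PER_CASE[region_a] * SUSCEPTIBLE[region_a]) / (
        DALY_PER_CASE[region_b] * SUSCEPTIBLE[region_b]
    )
    return math.log10(ratio)


delta = extra_log_reduction("low_income", "high_income")
print(f"{delta:.2f}")         # roughly 2 log10 units
print(f"{5.96 + delta:.2f}")  # compare with the 7.96 logs quoted above
```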

The derivation of these performance targets is described in the example risk assessment table, which provides an example of the data and calculations that would normally be used to construct a risk assessment model for waterborne pathogens. The table presents data for representatives of the three major groups of pathogens (bacteria, viruses and protozoa) from a range of sources. These example calculations aim at achieving the reference level of risk of 10−6 DALY per person per year, as described in section 3.2. The data in the table illustrate the calculations needed to arrive at a risk estimate and are not guideline values.
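The chain of calculations in this section can be sketched end to end. The forward calculation runs from raw water to treated water, then to dose, infection, illness and DALYs. The inverse solves for the log10 reduction that meets a target burden. This sketch assumes an exponential dose–response model and uses hypothetical placeholder inputs (`PathogenInputs` and its example values are illustrative, not quoted from the example table).

```python
import math
from dataclasses import dataclass


@dataclass
class PathogenInputs:
    """Inputs for one reference pathogen (hypothetical example values)."""
    raw_conc: float           # organisms per litre in raw water (arithmetic mean)
    r: float                  # exponential dose-response parameter
    p_ill_given_inf: float    # probability of illness given infection
    daly_per_case: float      # disease burden per case (DALY)
    susceptible: float = 1.0  # susceptible fraction of the population
    litres_per_day: float = 1.0


def daly_per_person_year(p: PathogenInputs, log_reduction: float) -> float:
    """Forward: raw water -> treated water -> dose -> infection -> illness -> DALY."""
    conc = p.raw_conc * 10 ** (-log_reduction)
    dose = conc * p.litres_per_day
    p_inf_day = -math.expm1(-p.r * dose)
    p_ill_year = 365 * p_inf_day * p.p_ill_given_inf
    return p_ill_year * p.daly_per_case * p.susceptible


def required_log_reduction(p: PathogenInputs, target: float = 1e-6) -> float:
    """Inverse: log10 reduction that meets `target` DALY per person per year."""
    p_ill_year = target / (p.daly_per_case * p.susceptible)
    p_inf_day = p_ill_year / (365 * p.p_ill_given_inf)
    dose = -math.log1p(-p_inf_day) / p.r  # invert P = 1 - exp(-r * dose)
    conc = dose / p.litres_per_day
    return math.log10(p.raw_conc / conc)


example = PathogenInputs(raw_conc=10, r=0.2, p_ill_given_inf=0.7,
                         daly_per_case=1.5e-3)
lrv = required_log_reduction(example)
print(f"required reduction: {lrv:.2f} log10")
print(f"check: {daly_per_person_year(example, lrv):.2e} DALY per person per year")
```

Feeding the computed reduction back into the forward calculation recovers the target burden. This round trip is a useful check on any spreadsheet or script built for the same purpose.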

7.2.5. Presenting the outcome of performance target development

A summary table presents some of the data from the example risk assessment in a format that is more meaningful to risk managers. The average concentration of pathogens in drinking-water is included for information. It is not a water quality target, nor is it intended to encourage pathogen monitoring in finished water. As an example, a concentration of 1.3 × 10−5 Cryptosporidium per litre corresponds to 1 oocyst per 79 000 litres. The performance target (in the row “Treatment effect” of that table), expressed as a log10 reduction value, is the most important management information in the risk assessment table. It can also be expressed as a per cent reduction. For example, a 5.96 log10 unit reduction for rotaviruses corresponds to a 99.999 89% reduction.
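Converting between log10 reduction values and per cent reductions is simple arithmetic. A minimal sketch, using the rotavirus and Cryptosporidium targets quoted in this section as inputs:

```python
import math


def percent_reduction(log10_reduction: float) -> float:
    """Convert a log10 reduction value to a percentage reduction."""
    return 100 * (1 - 10 ** (-log10_reduction))


def log10_reduction(percent: float) -> float:
    """Convert a percentage reduction (below 100) back to log10 units."""
    return -math.log10(1 - percent / 100)


print(f"{percent_reduction(5.96):.5f} %")  # rotavirus target
print(f"{percent_reduction(5.89):.5f} %")  # Cryptosporidium target
print(log10_reduction(99.99))              # about 4 log10 units
```

Log10 units are usually easier to work with than per cent figures, which become unwieldy strings of nines at the reductions needed here.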

7.2.6. Adapting risk-based performance target setting to local circumstances

The reference pathogens illustrated in the previous sections will not be priority pathogens in all regions of the world. Wherever possible, country- or site-specific information should be used in assessments of this type. If no specific data are available, an approximate risk estimate can be based on default values (see below).

The example risk assessment accounts only for changes in water quality derived from treatment and not from source protection measures, which are often important contributors to overall safety, affecting pathogen concentration and/or variability. Its risk estimates also assume that there is no degradation of water quality in the distribution network. These may not be realistic assumptions under all circumstances, and it is advisable to take these factors into account wherever possible.

The example risk assessment presents point estimates only and does not account for variability and uncertainty. Full risk assessment models would incorporate such factors by representing the input variables by statistical distributions rather than by point estimates. However, such models are currently beyond the means of many countries, and data to define such distributions are scarce. Producing such data may involve considerable efforts in terms of time and resources, but will lead to much improved insight into the actual raw water quality and treatment performance.

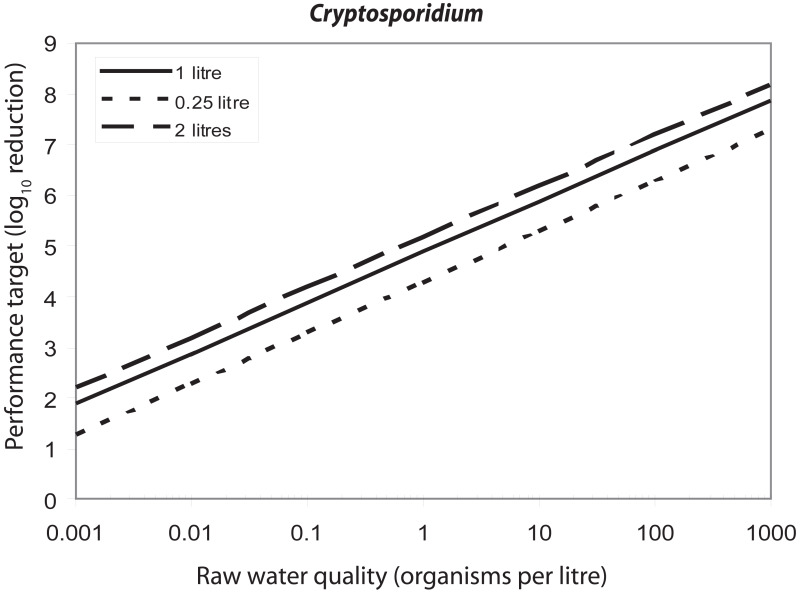

The necessary degree of treatment also depends on the values assumed for variables that can be taken into account in the risk assessment model. One such variable is drinking-water consumption. The figure above shows the effect of variation in the consumption of unboiled drinking-water on the performance targets for Cryptosporidium. If the raw water concentration is 1 oocyst per litre, the performance target varies between 4.3 and 5.2 log10 units if consumption varies between 0.25 and 2 litres per day. Another variable is the fraction of the population that is susceptible. Some outbreak data suggest that in developed countries, a significant proportion of the population above 5 years of age may not be immune to rotavirus illness. Varying the susceptible fraction also changes the target: if the raw water concentration is 10 rotavirus particles per litre, the performance target increases from 5.96 to 7.18 log10 units as the susceptible fraction increases from 6% to 100%.
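Both sensitivities quoted above follow from the linear low-dose regime, in which risk is proportional to consumption and to the susceptible fraction. A change in either input therefore shifts the target by log10 of the ratio. A minimal sketch under that assumption (`shifted_target` is a hypothetical helper name):

```python
import math


def shifted_target(base_target: float, base_value: float, new_value: float) -> float:
    """Log10 performance target after one multiplicative input changes.

    Assumes the low-dose linear regime, where risk is proportional to the
    input (e.g. litres consumed per day or the susceptible fraction), so the
    target moves by log10(new_value / base_value)."""
    return base_target + math.log10(new_value / base_value)


# Cryptosporidium, 1 oocyst per litre: 4.3 log10 at 0.25 litres per day
print(round(shifted_target(4.3, 0.25, 2.0), 2))
# Rotavirus, 10 particles per litre: 5.96 log10 at 6% susceptible
print(round(shifted_target(5.96, 0.06, 1.00), 2))
```

The two printed values reproduce the 5.2 and 7.18 log10 targets given in the text.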

7.2.7. Health outcome targets

Health outcome targets that identify disease reductions in a community should be addressed through the control measures set out in water safety plans and through associated water quality interventions at community and household levels. These targets would identify expected disease reductions in communities receiving the interventions.

The prioritization of water quality interventions should focus on those aspects that are estimated to contribute more than, for example, 5% of the burden of a given disease (e.g. 5% of total diarrhoea). In many parts of the world, the implementation of a water quality intervention that results in an estimated health gain of more than 5% would be considered extremely worthwhile. Directly demonstrating the health gains arising from improving water quality—as assessed, for example, by reduced E. coli counts at the point of consumption—may be possible where disease burden is high and effective interventions are applied and can be a powerful tool to demonstrate a first step in incremental drinking-water safety improvement.

Where a specified quantified disease reduction is identified as a health outcome target, it is advisable to undertake ongoing proactive public health surveillance among representative communities to measure the effectiveness of water quality interventions.

7.3. Occurrence and treatment of pathogens

As discussed in section 4.1, system assessment involves determining whether the drinking-water supply chain as a whole can deliver drinking-water quality that meets identified targets. This requires an understanding of the quality of source water and the efficacy of control measures, such as treatment.

7.3.1. Occurrence

An understanding of pathogen occurrence in source waters is essential, because it facilitates selection of the highest-quality source for drinking-water supply, determines pathogen concentrations in source waters and provides a basis for establishing treatment requirements to meet health-based targets within a water safety plan.

By far the most accurate way of determining pathogen concentrations in specific catchments and other water sources is by analysing pathogen concentrations in water over a period of time, taking care to include consideration of seasonal variation and peak events such as storms. Direct measurement of pathogens and indicator organisms in the specific source waters for which a water safety plan and its target pathogens are being established is recommended wherever possible, because this provides the best estimates of microbial concentrations. However, resource limitations in many settings preclude this. In the absence of measured pathogen concentrations, an alternative interim approach is to make estimations based on available data, such as the results of sanitary surveys combined with indicator testing.

In the absence of data on the occurrence and distribution of human pathogens in water for the community or area of implementation, concentrations in raw waters can be inferred from observational data on numbers of pathogens per gram of faeces (representing direct faecal contamination) or from numbers of pathogens per litre of untreated wastewater. Data from sanitary surveys can be used to estimate the impact of raw or treated wastewater discharged into source waters. In treated wastewater, the concentrations of pathogens may be reduced 10- to 100-fold or more, depending on the efficiency of the treatment process. The concentrations of pathogens in raw waters can be estimated from concentrations of pathogens in wastewater and the fraction of wastewater present in source waters. In addition, some indicative concentrations of pathogens measured in source waters at specific locations are given, but these concentrations may differ widely between locations.
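The estimate described above can be sketched as wastewater concentration, divided by the fold reduction achieved by any wastewater treatment, multiplied by the fraction of wastewater in the source water. This rough sketch ignores die-off, sedimentation and peak events. The function name and the example values are hypothetical.

```python
def source_water_conc(wastewater_conc: float, wastewater_fraction: float,
                      treatment_fold_reduction: float = 1.0) -> float:
    """Rough estimate of pathogen concentration in source water.

    wastewater_conc: pathogens per litre in untreated wastewater
    wastewater_fraction: fraction of the source water that is wastewater (0-1)
    treatment_fold_reduction: e.g. 10-100 for treated discharges, 1 for raw
    Ignores die-off, partitioning to sediments and seasonal peaks.
    """
    if not 0 <= wastewater_fraction <= 1:
        raise ValueError("wastewater_fraction must be between 0 and 1")
    if treatment_fold_reduction < 1:
        raise ValueError("treatment_fold_reduction must be >= 1")
    return wastewater_conc / treatment_fold_reduction * wastewater_fraction


# Hypothetical: 1000 per litre in wastewater, 100-fold treatment, 1% wastewater
print(source_water_conc(1000, 0.01, 100))
```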

The tabulated data show that faecal indicator bacteria, such as E. coli, are always present at high concentrations in wastewater. Everybody sheds E. coli; nevertheless, concentrations vary widely. Only infected persons shed pathogens; therefore, the concentrations of pathogens in wastewater vary even more. Such variations are due to shedding patterns, but they also depend on other factors, such as the size of the population discharging into wastewater and dilution with other types of wastewater, such as industrial wastewater. Conventional wastewater treatment commonly reduces microbial concentrations by one or two orders of magnitude before the wastewater is discharged into surface waters. At other locations, raw wastewater may be discharged directly, or discharges may occur occasionally during combined sewer overflows. Discharged wastewater is diluted in receiving surface waters, leading to reduced pathogen numbers, with the dilution factor being very location specific. Pathogen inactivation, die-off or partitioning to sediments may also play a role in pathogen reduction. These factors differ with the surface water body and climate. This variability suggests that concentrations of faecal indicators and pathogens vary even more in surface water than in wastewater.

Because of differences in survival, the ratio of pathogens to E. coli at the point of discharge will not be the same as farther downstream. A comparison of data on E. coli with pathogen concentrations in surface waters indicates that, overall, there is a positive relationship between the presence of pathogens in surface water and E. coli concentration, but that pathogen concentrations may vary widely from low to high at any E. coli concentration. Even the absence of E. coli does not guarantee that pathogens are absent or that their concentrations are below levels of public health significance.

The tabulated estimates based on field data provide a useful guide to the concentrations of enteric pathogens in a variety of sources affected by faecal contamination. However, there are a number of limitations and sources of uncertainty in these data, including the following:

Although data on pathogens and E. coli were derived from different regions of the world, the great majority are from high-income countries.

There are concerns about the sensitivity and robustness of analytical techniques, particularly for viruses and protozoa, largely associated with the recoveries achieved by techniques used to process and concentrate large sample volumes typically used in testing for these organisms.

Numbers of pathogens were derived using a variety of methods, including culture-based methods using media or cells, molecular-based tests (such as polymerase chain reaction) and microscopy, and should be interpreted with care.

The lack of knowledge about the infectivity of the pathogens for humans has implications in risk assessment and should be addressed.

7.3.2. Treatment

Understanding the efficacy of control measures includes validation (see sections 2.2 and 4.1.7). Validation is important both in ensuring that treatment will achieve the desired goals (performance targets) and in assessing areas in which efficacy may be improved (e.g. by comparing performance achieved with that shown to be achievable through well-run processes). Water treatment can be applied in a drinking-water treatment plant serving piped systems (central treatment) or, in settings other than piped supplies, in the home or at the point of use.

Central treatment

Waters of very high quality, such as groundwater from confined aquifers, may rely on protection of the source water and the distribution system as the principal control measures for provision of safe water. More typically, water treatment is required to remove or destroy pathogenic microorganisms. In many cases (e.g. poor quality surface water), multiple treatment stages are required, including, for example, coagulation, flocculation, sedimentation, filtration and disinfection. A summary of treatment processes that are commonly used individually or in combination to achieve microbial reductions is given in the accompanying table (see also Annex 5). The minimum and maximum removals are indicated as log10 reduction values and may occur under failing and optimal treatment conditions, respectively.

The microbial reductions presented in the summary table are for broad groups or categories of microbes: bacteria, viruses and protozoa. This is because treatment efficacy for microbial reduction generally differs among these microbial groups as a result of the inherently different properties of the microbes (e.g. size, nature of protective outer layers, physicochemical surface properties). Within these microbial groups, differences in treatment process efficiencies are smaller among the specific species, types or strains of microbes. Such differences do occur, however, and the table presents conservative estimates of microbial reductions based on the more resistant or persistent pathogenic members of each microbial group. Where differences in removal by treatment between specific members of a microbial group are great, the results for the individual microbes are presented separately in the table.

Treatment efficacy for microbial reduction can also differ when different treatment processes are combined. Applying multiple barriers in treatment, for example in drinking-water treatment plants, may strengthen performance, as failure of one process does not result in failure of the entire treatment. However, both positive and negative interactions can occur between multiple treatment steps, and how these interactions affect overall water quality and treatment performance is not yet completely understood. In positive interactions, the removal or inactivation of a contaminant is greater when two steps operate together than when each operates separately; for example, when coagulation and sedimentation are operating under optimal conditions, the performance of subsequent rapid sand filters increases. In contrast, negative interactions can occur when failure in the first step of the treatment process leads to failure of the next; for example, if coagulation fails to remove organic material, this could reduce the efficacy of subsequent disinfection and potentially increase the formation of disinfection by-products (DBPs). An overall assessment of drinking-water treatment performance, as part of the implementation of the WSP, will assist in understanding the efficacy of the multiple treatment processes in ensuring the safety of the drinking-water supply.
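A common first approximation when combining barriers is to add the log10 reductions of steps in series, which is the same as multiplying the fractions that survive each step. This sketch rests on the assumption that the steps act independently. The interactions described above mean the real figure can be higher or lower. The credits used are hypothetical, not values from the summary table.

```python
from typing import Sequence


def combined_log_reduction(step_lrvs: Sequence[float]) -> float:
    """Nominal overall log10 reduction for treatment steps in series.

    Assumes the steps act independently, so the fractions surviving each step
    multiply and their log10 reductions add. Interactions between steps can
    make the real performance higher or lower than this figure."""
    return sum(step_lrvs)


def surviving_fraction(total_lrv: float) -> float:
    """Fraction of organisms remaining after a given log10 reduction."""
    return 10 ** (-total_lrv)


# Hypothetical log10 credits for one microbial group (not tabulated values)
steps = {"coagulation and sedimentation": 1.0,
         "rapid sand filtration": 1.0,
         "chlorination": 2.0}
total = combined_log_reduction(list(steps.values()))
print(total, surviving_fraction(total))

# If coagulation fails it contributes no credit; downstream steps may also
# underperform (a negative interaction), so this figure is a best case.
degraded = {**steps, "coagulation and sedimentation": 0.0}
print(combined_log_reduction(list(degraded.values())))
```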

Further information about these water treatment processes, their operations and their performance for pathogen reduction in piped water supplies is provided in more detail in the supporting document Water treatment and pathogen control (Annex 1).

Household treatment

Household water treatment technologies are any of a range of devices or methods employed for the purposes of treating water in the home or at the point of use in other settings. These are also known as point-of-use or point-of-entry water treatment technologies (Cotruvo & Sobsey, 2006; Nath, Bloomfield & Jones, 2006; see also the supporting document Managing water in the home, Annex 1). Household water treatment technologies comprise a range of options that enable individuals and communities to treat collected water or contaminated piped water to remove or inactivate microbial pathogens. Many of these methods are coupled with safe storage of the treated water to preclude or minimize contamination after household treatment (Wright, Gundry & Conroy, 2003).

Household water treatment and safe storage have been shown to significantly improve water quality and reduce waterborne infectious disease risks (Fewtrell & Colford, 2004; Clasen et al., 2006). Household water treatment approaches can have rapid and significant positive health impacts in situations where piped water systems are not possible and where people rely on source water that may be contaminated or where stored water becomes contaminated because of unhygienic handling during transport or in the home. Household water treatment can also be used to overcome the widespread problem of microbially unsafe piped water supplies. Similar small technologies can also be used by travellers in areas where the drinking-water quality is uncertain (see also section 6.12).

Not all household water treatment technologies are highly effective in reducing all classes of waterborne pathogens (bacteria, viruses, protozoa and helminths). For example, chlorine is ineffective for inactivating oocysts of the waterborne protozoan Cryptosporidium, whereas some filtration methods, such as ceramic and cloth or fibre filters, are ineffective in removing enteric viruses. Therefore, careful consideration of the health-based target microbes to control in a drinking-water source is needed when choosing among these technologies.

Definitions and descriptions of the various household water treatment technologies for microbial contamination follow:

Chemical disinfection: Chemical disinfection of drinking-water includes any chlorine-based technology, such as chlorine dioxide, as well as ozone, some other oxidants and some strong acids and bases. Except for ozone, proper dosing of chemical disinfectants is intended to maintain a residual concentration in the water to provide some protection from post-treatment contamination during storage. Disinfection of household drinking-water in developing countries is done primarily with free chlorine, either in liquid form as hypochlorous acid (commercial household bleach or more dilute sodium hypochlorite solution between 0.5% and 1% hypochlorite marketed for household water treatment use) or in dry form as calcium hypochlorite or sodium dichloroisocyanurate. This is because these forms of free chlorine are convenient, relatively safe to handle, inexpensive and easy to dose. However, sodium trichloroisocyanurate and chlorine dioxide are also used in some household water treatment technologies. Proper dosing of chlorine for household water treatment is critical in order to provide enough free chlorine to maintain a residual during storage and use. Recommendations are to dose with free chlorine at about 2 mg/l to clear water (< 10 nephelometric turbidity units [NTU]) and twice that (4 mg/l) to turbid water (> 10 NTU). Although these free chlorine doses may lead to chlorine residuals that exceed the recommended chlorine residual for water that is centrally treated at the point of delivery, 0.2–0.5 mg/l, these doses are considered suitable for household water treatment to maintain a free chlorine residual of 0.2 mg/l in stored household water treated by chlorination. Further information on point-of-use chlorination can be found in the document Preventing travellers’ diarrhoea: How to make drinking water safe (WHO, 2005).
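The dosing recommendation above translates into a simple volume calculation. This sketch of the arithmetic makes two assumptions. First, the solution strength is taken as available chlorine in per cent weight/volume, so a 1% solution holds 10 mg per millilitre. Second, water at exactly 10 NTU is treated as clear, because the text gives only < 10 and > 10 NTU. The function name is hypothetical.

```python
def chlorine_solution_ml(water_litres: float, turbidity_ntu: float,
                         solution_percent: float = 1.0) -> float:
    """Millilitres of hypochlorite solution to add to a container.

    Target free chlorine dose: about 2 mg/l for clear water and 4 mg/l for
    turbid water (> 10 NTU); exactly 10 NTU is treated as clear here (an
    assumption). solution_percent is assumed to be % w/v available chlorine.
    """
    dose_mg_per_l = 2.0 if turbidity_ntu <= 10 else 4.0
    chlorine_mg = dose_mg_per_l * water_litres
    mg_per_ml = solution_percent * 10.0  # 1% w/v = 10 g/l = 10 mg/ml
    return chlorine_mg / mg_per_ml


print(chlorine_solution_ml(20, turbidity_ntu=5))    # clear water, 1% solution
print(chlorine_solution_ml(20, turbidity_ntu=25))   # turbid water, 1% solution
print(chlorine_solution_ml(20, 5, solution_percent=0.5))
```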

Disinfection of drinking-water with iodine, which is also a strong oxidant, is generally not recommended for extended use unless the residual concentrations are controlled, because of concerns about adverse effects of excess intake on the thyroid gland; however, this issue is being re-examined, because dietary iodine deficiency is a serious health problem in many parts of the world (see also section 6.12 and Table 6.1). As for central treatment, ozone for household water treatment must be generated on site, typically by corona discharge or electrolytically, both of which require electricity. As a result, ozone is not recommended for household water treatment because of the need for a reliable source of electricity to generate it, its complexity of generation and proper dosing in a small application, and its relatively high cost. Strong acids or bases are not recommended as chemical disinfectants for drinking-water, as they are hazardous chemicals that can alter the pH of the water to dangerously low or high levels. However, as an emergency or short-term intervention, the juices of some citrus fruits, such as limes and lemons, can be added to water to inactivate Vibrio cholerae, if enough is added to sufficiently lower the pH of the water (probably to pH less than 4.5).

Membrane, porous ceramic or composite filters: These are filters with defined pore sizes and include carbon block filters, porous ceramics containing colloidal silver, reactive membranes, polymeric membranes and fibre/cloth filters. They rely on physical straining through a single porous surface or multiple surfaces having structured pores to physically remove and retain microbes by size exclusion. Some of these filters may also employ chemical antimicrobial or bacteriostatic surfaces or chemical modifications to cause microbes to become adsorbed to filter media surfaces, to be inactivated or at least to not multiply. Cloth filters, such as those of sari cloth, have been recommended for reducing Vibrio cholerae in water. However, these filters reduce only vibrios associated with copepods, other large crustaceans or other large eukaryotes retained by the cloth. These cloths will not retain dispersed vibrios or other bacteria not associated with copepods, other crustaceans, suspended sediment or large eukaryotes, because the pores of the cloth fabric are much larger than the bacteria, allowing them to pass through. Most household filter technologies operate by gravity flow or by water pressure provided from a piped supply. However, some forms of ultrafiltration, nanofiltration and reverse osmosis filtration may require a reliable supply of electricity to operate.

Granular media filters: Granular media filters include those containing sand or diatomaceous earth or others using discrete particles as packed beds or layers of surfaces over or through which water is passed. These filters retain microbes by a combination of physical and chemical processes, including physical straining, sedimentation and adsorption. Some may also employ chemically active antimicrobial or bacteriostatic surfaces or other chemical modifications. Other granular media filters are biologically active because they develop layers of microbes and their associated exopolymers on the surface of or within the granular medium matrix. This biologically active layer, called the schmutzdecke in conventional slow sand filters, retains microbes and often leads to their inactivation and biodegradation. A household-scale filter with a biologically active surface layer that can be dosed intermittently with water has been developed.

Solar disinfection: There are a number of technologies using solar irradiation to disinfect water. Some use solar radiation to inactivate microbes in either dark or opaque containers by relying on heat from sunlight energy. Others, such as the solar water disinfection or SODIS system, use clear plastic containers penetrated by UV radiation from sunlight that rely on the combined action of the UV radiation, oxidative activity associated with dissolved oxygen and heat. Other physical forms of solar radiation exposure systems also employ combinations of these solar radiation effects in other types of containers, such as UV-penetrable plastic bags (e.g. the “solar puddle”) and panels.

UV light technologies using lamps: A number of drinking-water treatment technologies employ UV light radiation from UV lamps to inactivate microbes. For household- or small-scale water treatment, most employ low-pressure mercury arc lamps producing monochromatic UV radiation at a germicidal wavelength of 254 nm. Typically, these technologies allow water in a vessel or in flow-through reactors to be exposed to the UV radiation from the UV lamps at sufficient dose (fluence) to inactivate waterborne pathogens. These may have limited application in developing countries because of the need for a reliable supply of electricity, cost and maintenance requirements.

Thermal (heat) technologies: Thermal technologies are those whose primary mechanism for the destruction of microbes in water is heat produced by burning fuel. These include boiling and heating to pasteurization temperatures (typically > 63 °C for 30 minutes when applied to milk). The recommended procedure for water treatment is to raise the temperature so that a rolling boil is achieved, removing the water from the heat and allowing it to cool naturally, and then protecting it from post-treatment contamination during storage (see the supporting document Boil water; Annex 1). The above-mentioned solar technologies using solar radiation for heat or for a combination of heat and UV radiation from sunlight are distinguished from this category.

Coagulation, precipitation and/or sedimentation: Coagulation or precipitation is any device or method employing a natural or chemical coagulant or precipitant to coagulate or precipitate suspended particles, including microbes, to enhance their sedimentation. Sedimentation is any method for water treatment using the settling of suspended particles, including microbes, to remove them from the water. These methods may be used along with cloth or fibre media for a straining step to remove the floc (the large coagulated or precipitated particles that form in the water). This category includes simple sedimentation (i.e. that achieved without the use of a chemical coagulant). This method often employs three pots or other water storage vessels in series, with the settled water carefully decanted into the next vessel daily; by the third vessel, the water has been sequentially settled and stored for a total of at least 2 days to reduce microbes.

Combination (multiple-barrier) treatment approaches: These are any of the above technologies used together, either simultaneously or sequentially, for water treatment. These combination treatments include coagulation plus disinfection, media filtration plus disinfection or media filtration plus membrane filtration. Some are commercial single-use chemical products in the form of granules, powders or tablets containing a chemical coagulant, such as an iron or aluminium salt, and a disinfectant, such as chlorine. When added to water, these chemicals coagulate and flocculate impurities to promote rapid and efficient sedimentation and also deliver the chemical disinfectant (e.g. free chlorine) to inactivate microbes. Other combined treatment technologies are physical devices that include two or more stages of treatment, such as media or membrane filters or adsorbents to physically remove microbes and either chemical disinfectants or another physical treatment process (e.g. UV radiation) to inactivate any remaining microbes not physically removed by filtration or adsorption. Many of these combined household water treatment technologies are commercial products that can be purchased for household or other local use. When choosing a commercial combination device, it is important to consider which treatment technologies it incorporates. It is also desirable to require that such devices meet specific microbial reduction performance criteria and preferably be certified for such performance by a credible national or international authority, such as a government body or an independent organization representing the private sector that certifies good practice and documented performance.
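Because treatment performance is usually expressed as a log10 reduction value (LRV), the combined effect of stages used in sequence can be sketched by adding their LRVs. This holds only under the simplifying assumption that each stage acts independently on whatever the previous stage lets through; in practice, for example, organisms shielded within particles may resist a later disinfection step. The stage values below are hypothetical and are not taken from these Guidelines, and the function names are illustrative.

```python
import math

# Minimal sketch: combining log10 reduction values (LRVs) of treatment stages used in
# sequence. ASSUMES each stage acts independently on whatever the previous stage lets
# through, so the fractions remaining multiply and the LRVs add.


def combined_lrv(stage_lrvs: list[float]) -> float:
    """Total LRV of stages applied in sequence (independence assumed)."""
    return sum(stage_lrvs)


def fraction_remaining(stage_lrvs: list[float]) -> float:
    """Fraction of organisms passing all stages: product of 10**(-LRV) per stage."""
    return math.prod(10.0 ** (-lrv) for lrv in stage_lrvs)


# Hypothetical stage values, not taken from these Guidelines:
# a coagulation step of 1.0 log10 followed by a disinfection step of 3.0 log10.
stages = [1.0, 3.0]
print(combined_lrv(stages))  # 4.0
print(fraction_remaining(stages))
```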

Estimated reductions of waterborne bacteria, viruses and protozoan parasites by several of the above-mentioned household water treatment technologies are summarized in . These reductions are based on the results of studies reported in the scientific literature. Two categories of effectiveness are reported: baseline removals and maximum removals. Baseline removals are those typically expected in actual field practice, when treatment is applied by relatively unskilled persons to raw waters of average and varying quality, with minimal facilities or supporting instruments to optimize treatment conditions and practices. Maximum removals are those possible when treatment is optimized by skilled operators who are supported with instrumentation and other tools to maintain the highest level of performance in waters of predictable and unchanging quality (e.g. a test water seeded with known concentrations of specific microbes). It should be noted that the log10 reduction values of certain water treatment processes differ between those specified for household water treatment in and those specified for central treatment in . Such differences in the performance of the same treatment technologies are to be expected, because central treatment is often applied to water whose quality is well suited to the treatment process, and treatment is applied by trained operators using properly engineered and operationally controlled processes. In contrast, household water treatment is often applied to waters of widely varying quality, some of which is suboptimal for the technology's performance, and the treatment is often applied without specialized operational controls by people who are relatively untrained and unskilled in treatment operations compared with those managing central water treatment facilities.
Further details on these treatment processes, including the factors that influence their performance and the basis for the log10 reduction value performance levels provided in , can be found in the supporting documents Managing water in the home and Evaluating household water treatment options (Annex 1).
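The log10 reduction values referred to above compare organism concentrations before and after treatment, LRV = log10(C_before / C_after), so 1, 2, 3 and 4 log10 correspond to 90%, 99%, 99.9% and 99.99% removal. A minimal sketch follows, using hypothetical counts from a seeded challenge test and illustrative function names; in a real study, results below the detection limit need separate handling rather than being entered as zero.

```python
import math

# Minimal sketch: log10 reduction value (LRV) from organism concentrations measured
# before and after treatment, e.g. in a seeded challenge test.


def log10_reduction(conc_before: float, conc_after: float) -> float:
    """LRV = log10(C_before / C_after). Both concentrations must be positive;
    results below the detection limit need separate handling."""
    if conc_before <= 0 or conc_after <= 0:
        raise ValueError("concentrations must be positive")
    return math.log10(conc_before / conc_after)


def percent_removal(lrv: float) -> float:
    """Convert an LRV to percentage removal: 100 * (1 - 10**(-LRV))."""
    return 100.0 * (1.0 - 10.0 ** (-lrv))


# Hypothetical challenge-test result: 1 000 000 organisms/L before, 100 organisms/L after.
lrv = log10_reduction(1_000_000, 100)
print(round(lrv, 2))                   # 4.0
print(round(percent_removal(lrv), 2))  # 99.99
```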

The values in do not account for post-treatment contamination of stored water, which may limit the effectiveness of some technologies where safe storage methods are not practised. The best options for water treatment at the household level will also employ means for safe storage, such as covered, narrow-mouthed vessels with a tap system or spout for dispensing stored water.

Validation, surveillance and certification of household water treatment and storage are recommended, just as they are for central water supplies and systems. The entities responsible for these activities for household water treatment systems may differ from those of central supplies. In addition, separate entities may be responsible for validation, independent surveillance and certification. Nevertheless, validation and surveillance as well as certification are critical for effective management of household and other point-of-use and point-of-entry drinking-water supplies and their treatment and storage technologies, just as they are for central systems (see sections 2.3 and 5.2.3).

Non-piped water treatment technologies manufactured by or obtained from commercial or other external sources should be certified to meet performance or effectiveness requirements or guidelines, preferably by an independent, accredited certification body. If the treatment technologies are locally made and managed by the household itself, efforts to document effective construction and use and to monitor performance during use are recommended and encouraged.

7.4. Microbial monitoring

Microbial monitoring can be undertaken for a range of purposes, including:

source water monitoring for identifying performance targets (see sections 7.2 and 7.3.1);

collecting data for QMRA (see also section 7.2.3 and the supporting document Quantitative microbial risk assessment: application to water safety management, Annex 1).
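Source water monitoring feeds directly into performance targets: if a pathogen concentration in source water has been measured and a tolerable concentration in treated water has been set (for example, through QMRA), the log10 reduction that treatment must achieve is log10(source concentration / tolerable concentration). The sketch below takes both concentrations as given inputs; the numbers are hypothetical, the function name is illustrative, and how the tolerable concentration is derived is outside its scope.

```python
import math

# Minimal sketch: log10 reduction needed to bring a measured source-water pathogen
# concentration down to a tolerable concentration in treated water. Both values are
# taken as inputs; deriving the tolerable concentration (e.g. by QMRA) is out of scope.


def required_lrv(source_conc_per_l: float, tolerable_conc_per_l: float) -> float:
    """Performance target as log10(source / tolerable); never negative."""
    if source_conc_per_l <= 0 or tolerable_conc_per_l <= 0:
        raise ValueError("concentrations must be positive")
    return max(0.0, math.log10(source_conc_per_l / tolerable_conc_per_l))


# Hypothetical numbers for illustration only.
print(round(required_lrv(10.0, 1e-5), 1))  # 6.0
```

If monitoring later shows higher source concentrations, for example after heavy rainfall, the required reduction rises by one log10 unit for each tenfold increase.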

Owing to issues relating to complexity, sensitivity of detection, cost and timeliness of obtaining results, testing for specific pathogens is generally limited to assessing raw water quality as a basis for identifying performance targets and validation, where monitoring is used to determine whether a treatment or other process is effective in removing target organisms. Very occasionally, pathogen testing may be performed to verify that a specific treatment or process has been effective. However, microbial testing included in verification, operational and surveillance monitoring is usually limited to testing for indicator organisms.

Different methods can be employed for the detection of bacteria, viruses, protozoan parasites and helminths in water. Some methods, such as microscopy, rely on detection of the whole particle or organism. Other methods, such as molecular amplification using the polymerase chain reaction (PCR), target genomic material: deoxyribonucleic acid (DNA) or ribonucleic acid (RNA). Still other methods, such as immunological detection methods (e.g. enzyme-linked immunosorbent assay [ELISA]), target proteins. Culture-based methods, such as broth cultures or agar-based media for bacteria, cell cultures for viruses and host bacterial cultures for phages, detect organisms by infection or growth.

Culture in broth or on solid media is largely applied to determine the number of viable bacteria in water. The best known examples are culture-based methods for indicators such as E. coli. Viruses can be detected by several methods. Using cell culture, the number of infectious viruses in water can be determined. Alternatively, viral genomes can be detected by use of PCR. Protozoan parasites are often detected by immunomagnetic separation in combination with immunofluorescence microscopy. PCR can also be applied. Helminths are generally detected using microscopy.

In source investigations of waterborne infectious disease outbreaks, microbial hazards are generally typed by PCR, which can be followed by sequencing analysis to improve the precision of identification. One innovative approach is metagenome analysis (i.e. sequencing nucleic acid obtained directly from environmental samples), which can detect a wide range of microbial hazards in a single water sample.

It is important to recognize that the different methods measure different properties of microorganisms. Culture-based methods detect living organisms, whereas microscopy, detection of nucleic acid and immunological assays measure the physical presence of microorganisms or components of them, and do not necessarily determine if what is detected is alive or infectious. This creates greater uncertainty regarding the significance of the human health risk compared with detection by culture-based methods. When using non-culture methods that do not measure in units indicative of culturability or infectivity, assumptions are often made about the fraction of pathogens or components detected that represent viable and infectious organisms.
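To show how much the assumption about viability and infectivity can matter, the sketch below converts a hypothetical PCR result in genome copies per litre into an estimated number of infectious units per litre using an assumed infectious fraction. Neither the result nor the fractions are measured or recommended values; they are placeholders, and the function name is illustrative.

```python
# Minimal sketch: converting a non-culture result (e.g. genome copies per litre from
# PCR) into an assumed number of infectious units per litre. The infectious fraction
# is an ASSUMPTION supplied by the assessor, not something the assay measures.


def estimated_infectious_per_l(genome_copies_per_l: float, infectious_fraction: float) -> float:
    """Return genome copies per litre multiplied by the assumed infectious fraction."""
    if genome_copies_per_l < 0:
        raise ValueError("concentration must be non-negative")
    if not 0.0 <= infectious_fraction <= 1.0:
        raise ValueError("infectious_fraction must be between 0 and 1")
    return genome_copies_per_l * infectious_fraction


# Hypothetical PCR result and two alternative assumptions.
for fraction in (1.0, 0.01):
    print(fraction, estimated_infectious_per_l(500.0, fraction))
```

A 100-fold change in the assumed fraction changes the estimated infectious concentration 100-fold, which is why such assumptions should be stated explicitly when non-culture results are used in risk assessment.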

The concept of using organisms such as E. coli as indicators of faecal pollution is a well-established practice in the assessment of drinking-water quality. The criteria determined for such faecal indicators are that they should not be pathogens themselves and they should:

be universally present in faeces of humans and animals in large numbers;

not multiply in natural waters;

persist in water in a similar manner to faecal pathogens;

be present in higher numbers than faecal pathogens;

respond to treatment processes in a similar fashion to faecal pathogens;

be readily detected by simple, inexpensive culture methods.

These criteria reflect an assumption that the same organism could be used as an indicator of both faecal pollution and treatment/process efficacy. However, it has become clear that one indicator cannot fulfil these two roles and that a range of organisms should be considered for different purposes (). For example, heterotrophic bacteria can be used as operational indicators of disinfection effectiveness and distribution system cleanliness; Clostridium perfringens and coliphage can be used to validate the effectiveness of treatment systems.

Escherichia coli has traditionally been used to monitor drinking-water quality, and it remains an important parameter in monitoring undertaken as part of verification or surveillance. Thermotolerant coliforms can be used as an alternative to the test for E. coli in many circumstances. Water intended for human consumption should contain no faecal indicator organisms. In the majority of cases, monitoring for E. coli or thermotolerant coliforms provides a high degree of assurance because of their large numbers in polluted waters.
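The verification criterion can be expressed as a simple check. This minimal sketch assumes results are reported as counts per 100 mL sample and interprets "no faecal indicator organisms" as no detection in any sample of the set; the function name is hypothetical. Passing such a check does not by itself rule out more resistant viruses or protozoa.

```python
# Minimal sketch of a verification check. ASSUMES each result is the count of E. coli
# (or thermotolerant coliforms) in one 100 mL sample, and interprets "no faecal
# indicator organisms" as no detection in any sample of the set.


def passes_faecal_indicator_check(counts_per_100ml: list[int]) -> bool:
    """Return True only if no sample in a non-empty set had a detection."""
    if not counts_per_100ml:
        raise ValueError("at least one sample result is required")
    return all(count == 0 for count in counts_per_100ml)


print(passes_faecal_indicator_check([0, 0, 0]))  # True
print(passes_faecal_indicator_check([0, 1, 0]))  # False
```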

However, increased attention has focused on the shortcomings of traditional indicators, such as E. coli, as indicator organisms for enteric viruses and protozoa. Viruses and protozoa that are more resistant than E. coli to environmental conditions or to treatment technologies, including filtration and disinfection, may be present in treated drinking-water in the absence of E. coli. Retrospective studies of waterborne disease outbreaks have shown that complete reliance on assumptions about the absence or presence of E. coli may not ensure safety. Under certain circumstances, it may be desirable to include more resistant microorganisms, such as bacteriophages and/or bacterial spores, as indicators of persistent microbial hazards. Their inclusion in monitoring programmes, including control and surveillance programmes, should be evaluated in relation to local circumstances and scientific understanding. Such circumstances could include the use of source water that is known to be contaminated with enteric viruses and parasites, or that is suspected of such contamination as a result of the impacts of human and livestock waste.

Further discussion on indicator organisms is contained in the supporting document Assessing microbial safety of drinking water (Annex 1).

presents guideline values for verification of the microbial quality of drinking-water. Individual values should not be used directly from the table. The guideline values should be used and interpreted in conjunction with the information contained in these Guidelines and other supporting documentation.

A consequence of variable susceptibility to pathogens is that exposure to drinking-water of a particular quality may lead to different health effects in different populations. For derivation of national standards, it is necessary to define reference populations or, in some cases, to focus on specific vulnerable subpopulations. National or local authorities may wish to apply specific characteristics of their populations in deriving national standards.

7.5. Methods of detection of faecal indicator organisms

Analysis for faecal indicator organisms provides a sensitive, although not the most rapid, indication of pollution of drinking-water supplies. Because the growth medium and the conditions of incubation, as well as the nature and age of the water sample, can influence the species isolated and the count, microbiological examinations may have variable accuracy. This means that the standardization of methods and of laboratory procedures is of great importance if criteria for the microbial quality of water are to be uniform in different laboratories and internationally.

International standard methods should be evaluated under local circumstances before being adopted. Established standard methods are available, such as those of the International Organization for Standardization (ISO) (), or methods of equivalent efficacy and reliability. It is desirable that established standard methods be used for routine examinations. Whatever method is chosen for detection of E. coli or thermotolerant coliforms, the importance of “resuscitating” or recovering environmentally damaged or disinfectant-damaged strains must be considered.
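Culture-based counts are usually reported per 100 mL. When a measured volume is passed through a membrane filter and the filter is incubated on a selective medium, the colony count is scaled to that volume. A minimal sketch of the arithmetic follows, with hypothetical counts and an illustrative function name; it does not address colony confirmation, countable ranges or recovery of damaged organisms, which the chosen standard method defines.

```python
# Minimal sketch: scaling a membrane-filtration colony count to colony-forming units
# (CFU) per 100 mL. Colony confirmation, countable ranges and recovery of damaged
# organisms are defined by the standard method in use and are not modelled here.


def cfu_per_100ml(colonies_counted: int, volume_filtered_ml: float) -> float:
    """Return colonies_counted scaled from the filtered volume to 100 mL."""
    if colonies_counted < 0:
        raise ValueError("colony count must be non-negative")
    if volume_filtered_ml <= 0:
        raise ValueError("filtered volume must be positive")
    return colonies_counted * 100.0 / volume_filtered_ml


# Hypothetical result: 12 colonies after filtering 50 mL.
print(cfu_per_100ml(12, 50.0))  # 24.0
```

A count of zero shows only that no colonies were detected in the volume filtered, so the volume examined should always be reported with the result.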

7.6. Identifying local actions in response to microbial water quality problems and emergencies